Learning Hypernyms : Assignment 8

In linguistics, Hypernymy is an important lexical-semantic relationship that captures the type-of relation. In this relation, a hyponym is a word or phrase whose semantic field is included within that of another word, its hypernym. For example, rock, blues and jazz are all hyponyms of music genre (hypernym).

In this assignment, we will examine unsupervised techniques to automatically extract a list of word pairs that satisfy the hypernymy relation using a large corpus. Specifically, we will use

- A rule-based technique using lexico-syntactic patterns to extract hyponym-hypernym word pairs.

- Another rule-based technique but using dependency-paths

- A DIY approach where you can use any supervised/unsupervised technique extract hypernym-hyponym word pairs.

In this assignment, we will be using nltk. You can install nltk and the relavant tagger using this command:

pip install nltk

$ python

>>> import nltk

>>> nltk.download('punkt')

>>> nltk.download('averaged_perceptron_tagger')

Here are the materials that you should download for this assignment:

lexicalinference.zipContains all the relevant code.bless2011/Contains the train, validation and test data. In .tsv train and validation files, word1 is the hyponym of word2 if its label is True, if it is False, they aren’t related.wikipedia_sentences.txtContains tokenized relevant wikipedia sentences. A lemmatized version also exists.new_wikipedia_deppaths.txtContains word pairs and the shortest dependency path between them as extracted using spaCy

Part 1: Hearst Patterns for Hypernym Learning

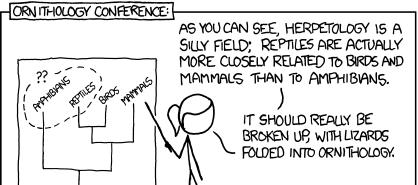

Marti Hearst, in her classic 1992 COLING paper Automatic Aquisition of Hyponyms from Large Text Cororpa, described how lexico-syntactic patterns can be used for hyponym acquisition.

Consider the sentence,

How do I distinguish among different musical genres such as blues, rock, and jazz, etc., and is there a good listeners’ trick to discern such distinctions?

Upon reading, we can easily infer that blues, rock and jazz are types of musical genres. Consider another sentence that contains a similar lexico-syntactic construction and expresses a hypernymy relation between green vegetables and spinach, peas, and kale.

I am going to get green vegetables such as spinach, peas and kale.

We can generalize this construction to

NP_0 such as NP_1, NP_2, ... (and | or) NP_n

where NP_0 is the hypernym of NP_1, NP_2, …, NP_n. Here, NP_x refers to a noun phrase or noun chunk.

As you can see in her paper, Hearst identified many such patterns, hence forth referred to as Hearst Patterns. Since these patterns are already manually constructed, we can use these patterns on a large corpus of unlabeled text for hyponym acquisition. For example, if our corpus only contained the sentences above, we could extract hypo-hypernym pairs such as

(blues, musical genres), (rock, musical genres), (jazz, musical genres), (spinach, green vegetables), (peas, green vegetables), (kale, green vegetables)

In this section of the assignment, we will use these patterns to extract hyper-hyponym word pairs from Wikipedia.

Evaluation Dataset

To evaluate the quality of our extraction, we will use a post-processed version of the BLESS2011 dataset. Levy et al. ‘15 post-processed to clean the dataset for reasons mentioned in the paper. Based on our extractions from a large corpus, we can label the test instances as a True hypo-hypernym extraction if it exists in the extracted list or as a False pair if it doesn’t. The data files are tab-separated with one-pair-per-line.

hyponym \t hypernym \t label

Unlabeled Wikipedia Corpus

As our large corpus to extract hyponyms, we will use Wikipedia text. Since Wikipedia is too large for efficient processing, we provide you with a pruned version. This only contains sentences that contain a word pair from train/val/test set. Each line in the file contains 2 tab-separated columns. The first column contains the tokenized sentence, and the second contains the lemmatized version of the same tokenized sentence.

How to Get Started

As you must have noticed, implementing Hearst Patterns requires noun-phrase chunking and then regex pattern matching where these patterns are relevant Hearst patterns.

In the code file hearst/hearstPatterns.py we use NLTK to implement a nltk-regex based noun-phrase chunker and Hearst pattern matching.

- This code first finds all the noun-chunks and converts the sentence into the following format,

I like to listen to NP_music from NP_musical_genres such as NP_blues , NP_rock and NP_jazz . - It then uses nltk-regex to find patterns defined in the list

self.__hearst_patterns. We have already implemented one pattern (NP_0 such as NP1, …) for you as:"(NP_\w+ (, )?such as (NP_\w+ ? (, )?(and |or )?)+)", "first"which will lead to extractions such as

('blues', 'musical genres'), ('rock', 'musical genres'), and ('jazz', 'musical genres')

Your job is to implement other Hearst Patterns and add them to the self.__hearst_patterns list.

To use the method above to perform large-scale extraction on Wikipedia, and evaluation against the provided dataset, we provide the following functions:

-

hearst/extractHearstHyponyms.py- Implements a method to apply the Hearst Patterns to all Wikipedia sentences, collect the extractions and write them to a file -

extractDatasetPredictions.py- Labels the train/val/test word-pairs as True (False) if they exist (don’t exist) in the extracted hypo-hypernym pairs -

computePRF.py- Takes the gold-truth and prediction file to compute the Precision, Recall and F1 score.

You should implement different Hearst Patterns, and/or come up with your own patterns by eyeballing Wikipedia data. Use the train and validation data to estimate the performance of different pattern combinations and submit the predictions from the best model on the test data as the file hearst.txt. The format of this file will the same as the train and validation data.

hyponym \t hypernym \t True(False)

Don’t panic if your validation performance is too low (~30%-36% for lemmatized corpus). Using the single pattern already implemented, you should get a validation score of 30% (lemmatized corpus).

In a writeup.pdf explain which patterns helped the most, and patterns that you came up with yourself.

Hint for post-processing extractions

As you might notice in the dataset, the (hypernym, hyponym) pair contains single, usually lemmatized, tokens. On the other hand, the extractions from our code extracts a lot of multi-word noun phrases. This could negatively affect our performance on the provided dataset. Our performance could drastically improve if we post-process our extractions to output only single token extractions.

You should be able to use your knowledge about noun-phrases (that the head of the noun-phrase usually is the last token) and lemmatization to figure out a post-processing methodology that might improve your performance. In the final report, explain your post-processing methodology, and make clear distinction between change in performance when the extractions were post-processed.

Part 2: Dependency Paths for Hypernym Learning

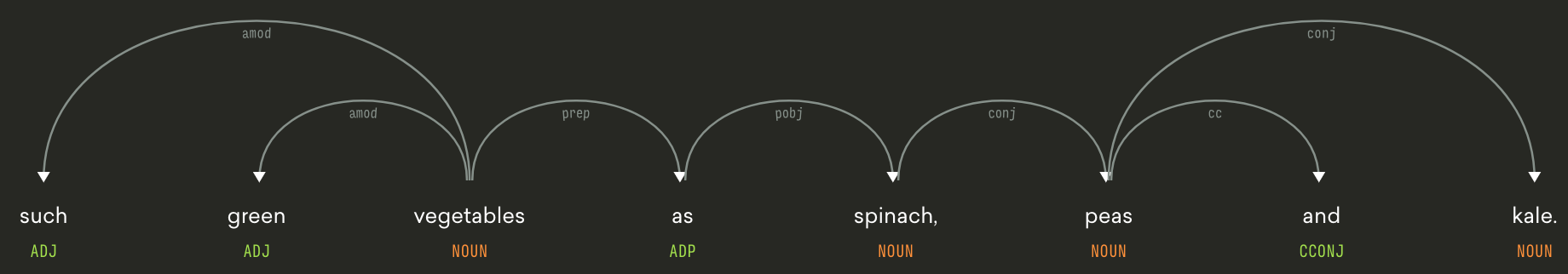

Consider the sentence snippet, … such green vegetables as spinach, peas and kale. and its dependency-parse using spaCy. Their visualization tool is called displaCy and can be accessed here: demos.explosion.ai/displacy

We (again) observe that there are dependency paths between vegetables and spinach, peas, and kale that can be typical in expressing hyper-hyponymy relation between words.

This observation was made in Snow et al.’s NIPS 2005 Learning syntactic patterns for automatic hypernym discovery paper. In this paper, the authors use the shortest dependency paths between noun-phrases to extract features and learn a classifier to predict whether a word-pair satisfies the hypo-hypernymy relation.

On the contrast, in this part, we will use the shortest dependency paths between noun (noun-chunk) pairs to predict hypernymy relation in an unsupervised manner. For example, in the example above the shortest dependency path between vegetables and spinach is

vegetables/NOUN -> Prep -> as/ADP -> pobj -> spinach/NOUN

For generalization, we can anonymize the start and end-points of the paths, as

X/NOUN -> Prep -> as/ADP -> pobj -> Y/NOUN

and can now predict that when a (X,Y) pair occurs in such a dependency-path, it usually means that X is the hypernym of Y.

Simply using the shortest dependency paths as two major issues that can be tackled in the following manner:

-

Better lexico-syntactic paths: Two words containing as in between is not a good indicator of the hypernymy relation being expressed and the word such outside the shortest path plays an important role. Snow et al. hence proposed Satellite edges, i.e. adding single edges to the left (right) of the leftmost (rightmost) token to the shortest-dependency path. In the example above, in addition to the shortest-path, the paths we extract will also contain satellite edges from vegetable -> green, vegetable -> such, and spinach -> peas.

-

Distributive Edges: The shortest path from vegetables from peas contains spinach/NOUN node connected via the conj edge. The presence of such specific words (spinach) in the path that do not inform of the hypernymy relation and negatively affect our extraction recall. The word spinach could be replaced with some other word, but the relation between peas and vegetables would still hold. Snow et al. proposed to add additional edges bypassing conj edges to mitigate this issue. Therefore, we can add edges of type pobj from vegetables to peas and kale.

Good News: You don’t need to extract such paths! We’ve extracted them for you for our Wikipedia corpus. You’re welcome! To keep the number of extracted paths tractable, the file new_wikipedia_deppaths.txt contains dependency paths extracted from Wikipedia between all train/val/test word pairs.

Similar to Snow et al., we only keep dependency paths of length 4 or shorter .

Each line of the file new_wikipedia_deppaths.txt contains three tab-separated columns, the first containing X, second Y and the third the dependency path.

An example path extraction is:

mammal fox such/ADJ/amod<_X/NOUN/dobj_<as/ADP/prep_<Y/NOUN/pobj

The path edges are delimited by the underscore ( _ ). Each edge contains word/POS_TAG/EdgeLabel. Hence the edges in the path are:

such/ADJ/amod<: An edge of type amod from the right side (X) to the word suchX/NOUN/dobj: An edge of type dobj from outside the shortest path span (since no direction marker (‘<’ or ‘>’) exists) to the token X<as/ADP/prep: An edge of type prep from as to the left of it (X).<Y/NOUN/pobj: An edge of type pobj from Y to the left of it (as)

How to proceed

Similar to how we used the Hearst Patterns to extract hypo-hypernym pairs, we will now use dependency-path patterns to do the same. Since it is difficult to come up with your own dependency path patterns, we suggest you use the the labeled training data to come up with a list of dependency-paths that are positive examples of paths between actual hyper-hyponym pairs.

-

depPath/extractRelevantDepPaths.py: Fill this python script, to extract relevant dependency paths fromnew_wikipedia_deppaths.txtusing the training data and store them to a file. Note that, these paths can be of different categories. For eg. Forward paths: Classify X/Y as Hyponym/Hypernym, Reverse paths: Classify X/Y as Hypernym/Hyponym, Negative paths: Classify X/Y as a negative pair etc. -

depPath/extractDepPathHyponyms.py: Complete this python script to generate a list of hypo-hypernym extractions for the wikipedia corpus (new_wikipedia_deppaths.txt) (similar to Hearst Patterns) -

extractDatasetPredictions.py: Similar to Hearst patterns, use this to label the train/val/test word-pairs as True (False) if they exist (don’t exist) in the extracted hypo-hypernym pairs. -

computePRF.py: Similar to Hearst Patterns, use this script to evaluate the performance on the given dataset

For the best performing dependency paths, submit the test predictions with the filename deppath.txt.

In the writeup.pdf explain few most occurring dependency paths and explain if they bear any correspondence to the Hearst patterns.

Part 3: DIY

In the previous two sections we saw how we can use manually extracted rule-based techniques to extract word-pairs satisfying hypernymy relations. In this section, you have to implement at least two additional methods to extract such word pairs.

A few of example ideas for additional techniques are:

- Combine the extractions from Hearst patterns and Dependency-path patterns for better extractions

- Learn a supervised classifier (similar to Snow et al) using the provided training data and features extracted from the dependency paths

- Use pre-trained word embeddings to learn a hypernymy prediction classifier, or combine word-embeddings as features to your dependency–path based classifier.

For this part, the test data predictions from the best performing technique should be uploaded to the relevant leaderboard as diy.txt.

In the writeup.pdf explain in detail the two methodologies implemented with performance analysis.

The Leaderboard

We will have one leaderboard for this assignment. You can use your DIY Model and submit a file called diy.txt to the leaderboard. We will give extra credit to the top teams on the leaderboard.

Deliverables

Here are the deliverables that you will need to submit.

- The writeup needs to be submit as

writeup.pdfto the HW8: Report Submission on gradescope: It must contains- Written analysis on additional Hearst Patterns implemented and which one worked the best/worst

- Written analysis commenting on the Precision/Recall values when using Hearst Patterns

- Written analysis on most frequent Dependency Paths that worked the best

- Written analysis commenting on the Precision/Recall values when using Dependency Paths

- Implementation details of the DIY models

- Performance analysis of the DIY models

- The prediction file

diy.txtto the leaderboard - Your code (.zip) with a

READMEto run. It should be written in Python 3. You must include the outputs of your training and validation files for all the models as described below. *The code structure must be as follows:-

/lexical inference -

/–/hearst -

/–/–hearstPatterns.py -

/–/–extractHearstHyponyms.py -

/–/depPath -

/–/–extractDepPathHyponyms.py -

/–/–extractRelevantDepPaths.py -

/–/diy -

/–/(All your diy files) -

/–extractDatasetPredictions.py -

/–computePRF.py -

/–hearst.txt -

/–hearst_train.txt -

/–hearst_val.txt -

/–deppath.txt -

/–deppath_train.txt -

/–deppath_val.txt -

/–diy.txt -

/–diy_train.txt -

/–diy_val.txt

-

FAQs

- How to run extractDatasetPredictions.py?

- extractDatasetPredictions.py labels the train/val/test word-pairs as True (False) if they exist (don’t exist) in the extracted hypo-hypernym pairs.

python extractDatasetPredictions.py --extractionsfile <path-to-hyp-hypernym-extractions-file> --trdata <path-to-bless2011/data_lex_train.tsv> --valdata <path-to-bless2011/data_lex_val.tsv> --testdata <path-to-bless2011/data_lex_test.tsv> --trpredfile <output-train-preductions-file> --valpredfile <output-val-preductions-file> --testpredfile <output-test-preductions-file>

- extractDatasetPredictions.py labels the train/val/test word-pairs as True (False) if they exist (don’t exist) in the extracted hypo-hypernym pairs.

- How to run computePRF.py?

- computePRF.py takes the gold-truth and prediction file to compute the Precision, Recall and F1 score.

python computePRF.py --goldfile <path-to-gold-truth-file> --predfile <path-to-prediction-file>

- computePRF.py takes the gold-truth and prediction file to compute the Precision, Recall and F1 score.

- Not getting 30% after running evaluation.

- You need to run on lemmatized sentences. Go into extractHearstHyponyms.py and make sure you are using lemmatized sentences, then re-run the extractions.

- Determining forward, reverse and negative dependency paths.

- Given a deppath, the direction of arrows is not the indicator of forward, reverse and negative dependency paths. So given “A B deppath”, If A is a hyponym of B, then this deppath is a forward dependency path. If B is a hyponym of A, then this deppath is a reverse dependency path. If A and B are false pairs, the deppath is negative. You need to extract relevant ones.

- We are expected to write analysis on most frequency paths that work the best. How to evaluate which dependency paths work the best?

- The dependency paths that resulted in high frequencies can be analysed.

- Tips for improving model in Part 2.

- Use different weights for forward and negative paths, weigh forward paths higher than negative paths.

- You never need to add a path that is only negative to relevant paths cause it is not relevant.

- If a path has been relevant mostly but sometimes negative it can still be included.

- Try to include forward paths which are not negative paths more number of times.

Recommended readings

| Relation Extraction (Section 21.2) Dan Jurafsky and James H. Martin. Speech and Language Processing (3rd edition draft) . |

| Learning syntactic patterns for automatic hypernym discovery Rion Snow, Daniel Jurafsky, Andrew Y. Ng. NIPS 2003. |

| Automatic acquisition of hyponyms from large text corpora Marti Hearst. COLING 1992. |

| Do Supervised Distributional Methods Really Learn Lexical Inference Relations? Omer Levy, Steffen Remus, Chris Beimann, Ido Dagan. NAACL 2015. |

| Improving Hypernymy Detection with an Integrated Path-based and Distributional Method Vered Shwartz, Yoav Goldberg and Ido Dagan. ACL 2016. |